If you're active in tech communities on LinkedIn, YouTube, or X, there's a good chance your feed is flooded with posts about MCP. Mine was too a few days ago, so I decided to dive in. Here, I'll summarize everything I've been able to find and understand so far.

TL;DR

MCP (Model Context Protocol) is not just another API; it's a new standard for injecting structured context into AI models. Think of it as a USB-C port for AI, allowing seamless and standardized integration with data sources, tools, and workflows. Why is it innovative? Because AI models are often context-blind, struggling to leverage real-world information effectively. MCP addresses this by bridging data silos, improving security, and enabling model-agnostic, reusable integrations. For devs and AI engineers, this means simplified integrations, better model performance, faster development, and future-proof AI architectures.

What's the Big Deal?

Many assume MCP is just another API, but it's much more than that. If you're picturing REST endpoints and JSON payloads, think bigger. MCP is a protocol for making AI models more independent: it gives a model the right context about the tools available to it, and puts the model's reasoning abilities to good use by letting it decide which tool it needs and when.

Why is Context so important?

AI models today are incredibly capable, but they often struggle because they don't always have the right context to work with. In frameworks like LangChain, we tackled this by giving models tools and teaching them when and how to use them. MCP takes this further by structuring context from the start, so models don't have to rely solely on vague system prompts or tool descriptions.

More Context ~= Better output + Accuracy from LLMs

Here's a good analogy: Think of asking a friend for homework help. Instead of just saying, “How do I solve this?” you'd explain:

- “Hey, I'm working on algebra homework.” (Domain)

- “We're learning about quadratic equations.” (Specific Topic)

- “I need to solve x² + 5x + 6 = 0.” (Task)

- “Can you walk me through the steps?” (Request)

In the traditional approach, a model gets a prompt and then, based on whatever context the system prompt provides, tries to figure out which tools to use and how to use them.

MCP changes this by providing structured context upfront, so models can make more informed decisions about which tools to use and how to use them.

The Problem with Current AI Tool Use

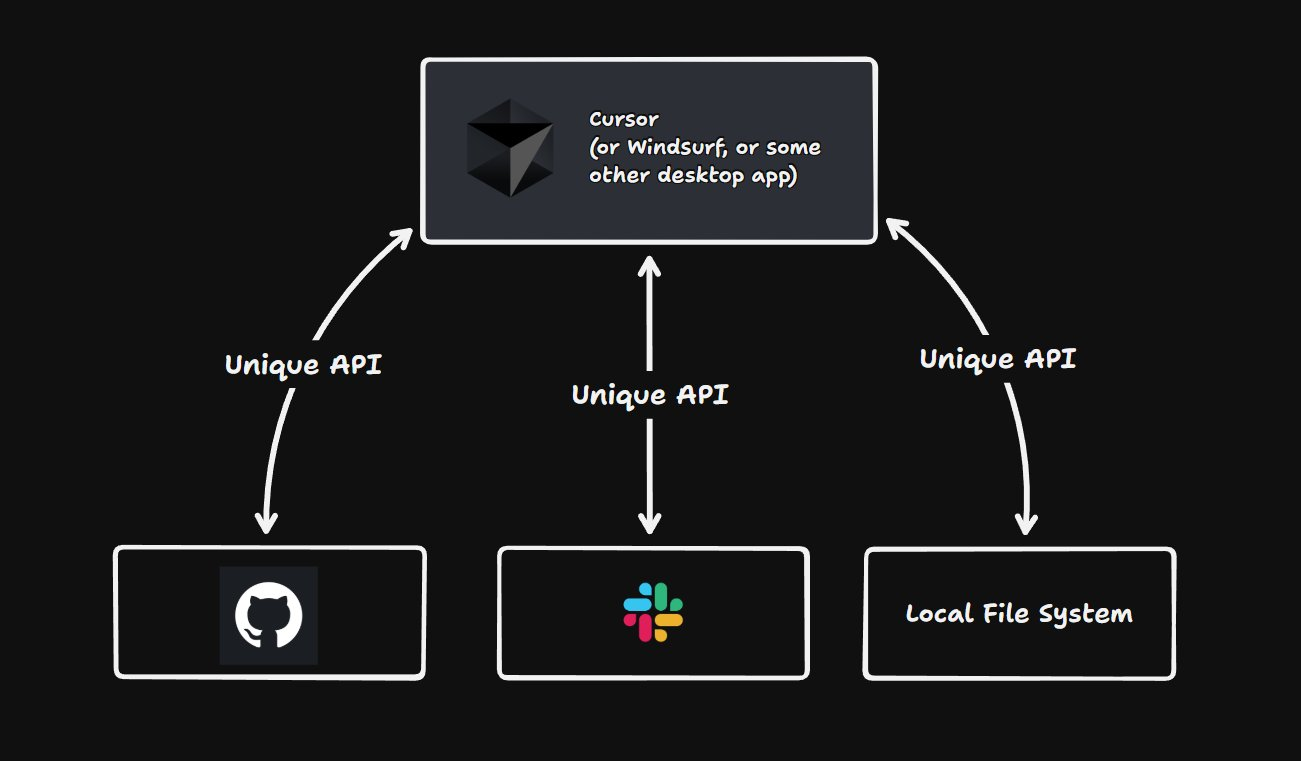

Image credit: Matt Pocock

Right now, AI models don't inherently know how to use external tools; they have to be taught. The typical approach involves building custom data access tools, writing prompts (such as docstrings) that describe when and how to use them, and then attaching these tools to the LLM through a system prompt. The assumption is that the model will figure out the right tool to use based on the user's query.

But this method has some serious downsides:

Glue Code Overload: Developers need to write a lot of extra code to ensure tools work as expected.

Prompt Engineering Hassles: LLMs rely on carefully worded system prompts to understand how to use tools, which can be tricky to get right.

Tight Coupling: If a tool changes, its documentation, system prompt, and implementation all need updating, creating long-term maintenance headaches.
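
To make these downsides concrete, here is a minimal sketch of the hand-wired approach described above. The tool names, docstrings, and placeholder return values are hypothetical and only for illustration; real tools would query a database or an external API.

```python
import inspect

# Hypothetical tools; the docstrings double as usage instructions for the model.
def search_orders(customer_id: str) -> list[str]:
    """Look up recent orders for a customer. Use when the user asks about order status."""
    return [f"order-1 for {customer_id}"]  # placeholder data


def get_weather(city: str) -> str:
    """Return the current weather for a city. Use for weather questions."""
    return f"Sunny in {city}"  # placeholder data


# Glue code: a registry maintained by hand for this one application.
TOOLS = {"search_orders": search_orders, "get_weather": get_weather}


def build_system_prompt(tools: dict) -> str:
    """Paste each tool's signature and docstring into the system prompt."""
    lines = ["You can call these tools:"]
    for name, fn in tools.items():
        lines.append(f"- {name}{inspect.signature(fn)}: {inspect.getdoc(fn)}")
    return "\n".join(lines)


def dispatch(tool_name: str, **kwargs):
    """Run whichever tool the model asked for."""
    return TOOLS[tool_name](**kwargs)
```

Notice that renaming a parameter in `get_weather` changes the function, the text of the system prompt, and every caller of `dispatch` at once, which is the tight coupling described above.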

How does MCP propose to fix this?

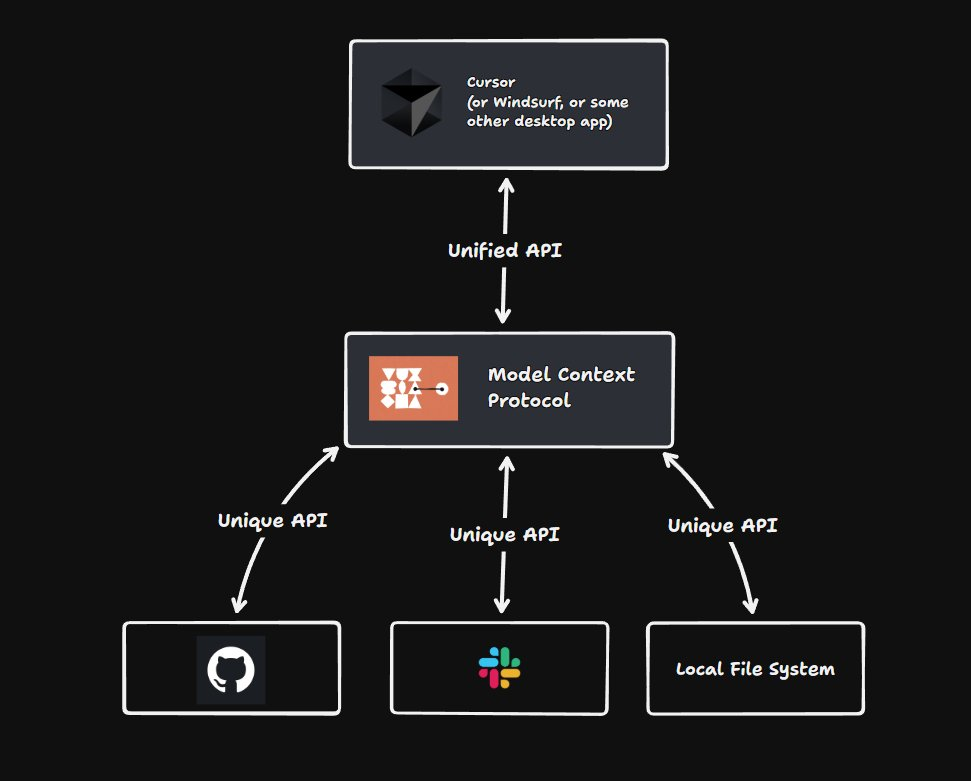

Image credit: Matt Pocock

MCP removes the guesswork by standardizing tool and data access, so developers don't have to reinvent the wheel every time they integrate a new source. Instead of manually wiring everything together, MCP provides a structured approach where tools can be created once and reused across multiple AI agents or applications.

With MCP, AI models get structured context upfront, eliminating ambiguity and reducing the reliance on system prompts. Instead of expecting the model to infer everything from vague instructions, we make sure all the key details are available from the start.
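
As a rough illustration of what "structured context" looks like on the wire: to my understanding, MCP messages are JSON-RPC 2.0, a server advertises its tools in response to `tools/list` (each with a `name`, a `description`, and a JSON Schema `inputSchema`), and a client invokes one with `tools/call`. The `get_weather` tool and the helper function names below are hypothetical; treat this as a sketch of the message shapes, not a complete client.

```python
import json

# Hypothetical tool descriptor, shaped like an entry in a tools/list result.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Return the current weather for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def tools_list_request(request_id: int) -> dict:
    """JSON-RPC request asking a server which tools it offers."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}


def tools_call_request(request_id: int, name: str, arguments: dict) -> dict:
    """JSON-RPC request invoking one tool with named arguments."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return required argument names (per the schema) that were not supplied."""
    required = tool["inputSchema"].get("required", [])
    return [key for key in required if key not in arguments]


if __name__ == "__main__":
    request = tools_call_request(1, "get_weather", {"city": "Pune"})
    print(json.dumps(request, indent=2))
```

Because the schema travels with the tool, the host can check a call before sending it instead of hoping the model read a prose description correctly.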

Bridging Data Silos: One big challenge in enterprise AI? Important data is scattered everywhere. Some info is in databases, some in cloud storage, some buried in Slack messages. MCP helps AI access and use data from multiple sources in a unified way, so models aren't just relying on bits and pieces; they get the full picture.

Works with Any AI Model: MCP isn't tied to one AI model. Whether you're using Claude, GPT, or your own custom LLM, MCP makes context handling consistent across different models and tools. Plus, its modular design means it plays nicely with different data sources and systems.
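
One way to picture the model-agnostic part: a host can take a single tool descriptor and translate it into whatever tool format a given model provider expects. The sketch below assumes the provider formats as I understand them at the time of writing (a flat `input_schema` object for Claude, a nested `function` object with `parameters` for GPT-style chat APIs), so check the current provider docs before relying on it. The `search_docs` tool and the converter names are hypothetical.

```python
# Hypothetical tool descriptor in the shape an MCP server would advertise.
SEARCH_TOOL = {
    "name": "search_docs",
    "description": "Search internal documentation for a query string.",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}


def to_anthropic_tool(tool: dict) -> dict:
    """Assumed Claude-style tool definition: flat object with input_schema."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["inputSchema"],
    }


def to_openai_tool(tool: dict) -> dict:
    """Assumed GPT-style tool definition: nested function object with parameters."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["inputSchema"],
        },
    }
```

The tool itself is defined once; only the thin adapter differs per model.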

Security and Access Control: Handling sensitive enterprise data? MCP bakes security into its design. It provides controlled access to data and tools, ensuring that AI models only access what they're supposed to.
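
What "controlled access" could look like in practice: the sketch below is a host-side allowlist that hides tools the model should not see and rejects calls to anything outside the list. This is my own illustrative pattern, not something the MCP specification prescribes; `ToolPolicy` and the tool names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToolPolicy:
    """Hypothetical per-session allowlist enforced by the host application."""

    allowed_tools: set[str] = field(default_factory=set)

    def filter_tools(self, tools: list[dict]) -> list[dict]:
        """Only expose tools on the allowlist to the model."""
        return [tool for tool in tools if tool["name"] in self.allowed_tools]

    def check_call(self, request: dict) -> None:
        """Raise PermissionError if a tools/call request targets a disallowed tool."""
        name = request.get("params", {}).get("name")
        if name not in self.allowed_tools:
            raise PermissionError(f"Tool {name!r} is not allowed for this session")


if __name__ == "__main__":
    policy = ToolPolicy(allowed_tools={"read_file"})
    advertised = [{"name": "read_file"}, {"name": "delete_file"}]
    print(policy.filter_tools(advertised))
```

Filtering the advertised list and checking each call are deliberately separate steps, so a model that somehow names a hidden tool is still stopped.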

Pre-Built Integrations: To make life easier, MCP comes with ready-to-use connectors for platforms like Google Drive, Slack, GitHub, and databases. This helps developers quickly link AI models to industry-standard data sources without writing each integration from scratch.

How to get started with MCP?

MCP is still new, but since it's an open standard, you can already start experimenting with it. Here are a few resources to dive in:

Read the Official Docs: Check out Anthropic's documentation for a deep dive into MCP's architecture.

Try Out Pre-Built MCP Servers: Since MCP is open-source, npm-like registries are emerging with pre-built MCP servers for many of the tools we use daily. Here are some of the most useful ones I've come across so far: smithery, mcp-get, glama, mcp.so.

Build Your Own MCP Server: Follow the quick start guide to create custom MCP servers tailored to your needs.
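
Before reaching for an SDK, it can help to see what a server does at its core. The sketch below is a toy, in-process dispatcher using only the standard library: it registers tools and answers `tools/list` and `tools/call` requests in the JSON-RPC shape MCP uses, as far as I understand it. It is not the official SDK and it skips the initialization handshake and the transport layer entirely; the `tool` decorator, `handle` function, and `add` tool are hypothetical names chosen for illustration.

```python
from typing import Callable

# Registry of tools keyed by name; each entry keeps its descriptor and handler.
TOOLS: dict[str, dict] = {}


def tool(name: str, description: str, input_schema: dict):
    """Decorator that registers a function as a callable tool."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = {
            "name": name,
            "description": description,
            "inputSchema": input_schema,
            "handler": fn,
        }
        return fn
    return register


@tool(
    "add",
    "Add two integers.",
    {
        "type": "object",
        "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
        "required": ["a", "b"],
    },
)
def add(a: int, b: int) -> str:
    return str(a + b)


def handle(request: dict) -> dict:
    """Answer a single JSON-RPC request with a result or an error."""
    base = {"jsonrpc": "2.0", "id": request.get("id")}
    method = request.get("method")
    if method == "tools/list":
        # Advertise descriptors only; handlers stay private to the server.
        public = [{k: v for k, v in t.items() if k != "handler"} for t in TOOLS.values()]
        return {**base, "result": {"tools": public}}
    if method == "tools/call":
        params = request.get("params", {})
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return {**base, "error": {"code": -32602, "message": "Unknown tool"}}
        text = entry["handler"](**params.get("arguments", {}))
        return {**base, "result": {"content": [{"type": "text", "text": text}], "isError": False}}
    # -32601 is the standard JSON-RPC "method not found" code.
    return {**base, "error": {"code": -32601, "message": "Method not found"}}
```

Calling `handle({"jsonrpc": "2.0", "id": 1, "method": "tools/call", "params": {"name": "add", "arguments": {"a": 2, "b": 3}}})` returns a result whose text content is `"5"`. A real server built with the quick start guide handles the rest of the protocol for you.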

Final Thoughts

MCP is still in its early days, and while it promises a smarter way to connect AI with real-world data sources, it's too soon to say how much of an impact it will have. On the innovation side, MCP's approach to standardizing context for AI is a major step forward: it could lead to better model accuracy, easier integrations, and more seamless automation. The pre-built connectors and modular framework make it appealing for developers looking to streamline workflows.

But there are still open questions. Will companies widely adopt it? Can it scale efficiently across different AI models and enterprise systems? Right now, it's an interesting idea with a lot of potential, but we'll have to see how it evolves. If you're in the AI space, it's worth keeping an eye on and experimenting with. But will it truly change the agentic AI game? Guess we'll just have to twiddle our thumbs and see.