Whether you've been working with LLMs or not, you've probably heard the terms "Agent" or "Agentic AI" thrown around a lot. After spending months building projects (both hobby and production), consuming a ton of content, and working daily with agentic frameworks like LangGraph and Google Vertex AI Engine, I have some thoughts.

TL;DR

AI agents are powerful tools for tasks requiring adaptability and large-scale automation, but they are often overused. They shine in scenarios where flexibility and dynamic decision-making are essential, such as automating complex workflows or handling unpredictable inputs. However, for simpler, more predictable tasks, workflows or single LLM calls are often more efficient and reliable. The key is to start with straightforward solutions, measure their effectiveness, and only introduce agentic systems when the added complexity delivers clear, measurable benefits.

Why are AI Agents so hyped?

With rapid advancements in generative AI, LLMs are unlocking automation opportunities that were previously impossible with traditional algorithms. Their dynamic reasoning and problem-solving abilities have sparked excitement among developers and companies eager to integrate these capabilities into their software to automate repetitive tasks like updating documentation or reviewing PRs. Even coding has become semi-automated, validating claims from AI giants like OpenAI, Anthropic, and Google about LLMs' potential to assist with trivial tasks. Agentic AI takes this further by aiming to fully automate such processes, an urge that, as a software engineer, I completely understand. However, this enthusiasm has also led to widespread FOMO, causing AI agents to be applied to problems where simpler solutions would suffice. It's like using a bazooka to kill a fly.

Workflows vs Agents: what's the difference?

Workflows

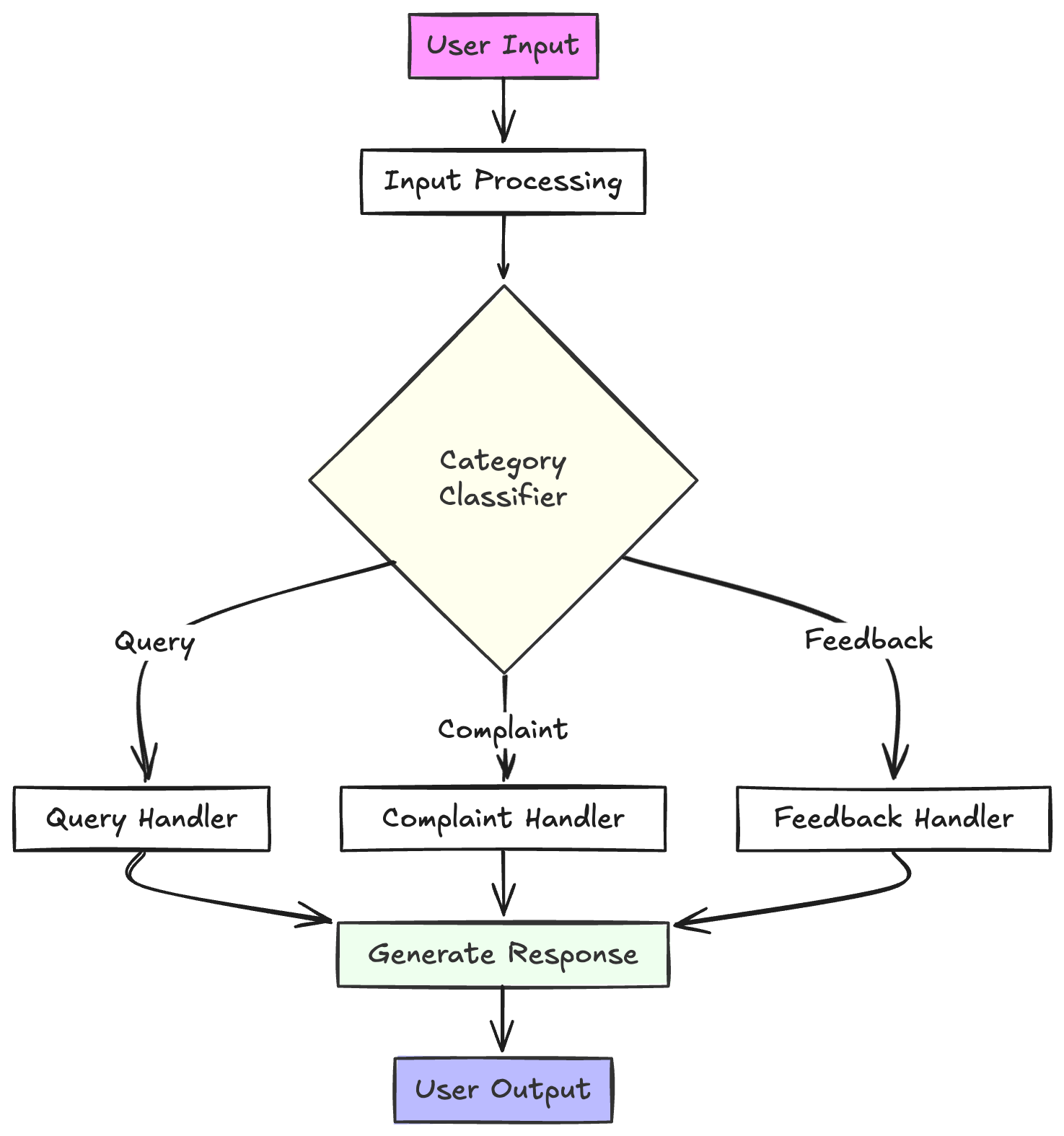

Workflows are predefined sequences where LLMs follow strict code paths, making them predictable and structured. Each step is designed with a clear goal in mind, ensuring that the system operates in a controlled, deterministic way. For example, if a user submits a prompt, a workflow could categorize it into "query," "complaint," "feedback," or "information" and then return a predefined response accordingly. This approach is ideal for cases where consistency and reliability are more important than flexibility.
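To make the categorization example concrete, here is a minimal sketch of such a workflow. Everything in it is an assumption for illustration: `fake_classifier` is a keyword-based stand-in for the single LLM classification call (so the sketch runs offline), and the response texts in `RESPONSES` are made up. The point is only that the code path after classification is fixed.

```python
from typing import Callable, Dict

# Predefined responses, one per category (wording is illustrative only).
RESPONSES: Dict[str, str] = {
    "query": "Thanks for your question. We will get back to you shortly.",
    "complaint": "We are sorry about the trouble. A support ticket has been opened.",
    "feedback": "Thank you for the feedback. We have passed it to the team.",
    "information": "Thanks for letting us know. We have recorded the details.",
}


def fake_classifier(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call that returns one category label."""
    text = prompt.lower()
    if "?" in text:
        return "query"
    if any(word in text for word in ("broken", "refund", "unacceptable")):
        return "complaint"
    if any(word in text for word in ("suggest", "love", "feedback")):
        return "feedback"
    return "information"


def run_workflow(prompt: str, classify: Callable[[str], str] = fake_classifier) -> str:
    """Fixed path: classify once, then look up the predefined response."""
    category = classify(prompt)
    # Guard against labels outside the known set, which a real model may emit.
    if category not in RESPONSES:
        category = "information"
    return RESPONSES[category]
```

Swapping `fake_classifier` for a real model call changes how the label is produced, but not what happens after it, which is what keeps the system predictable.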

Agents

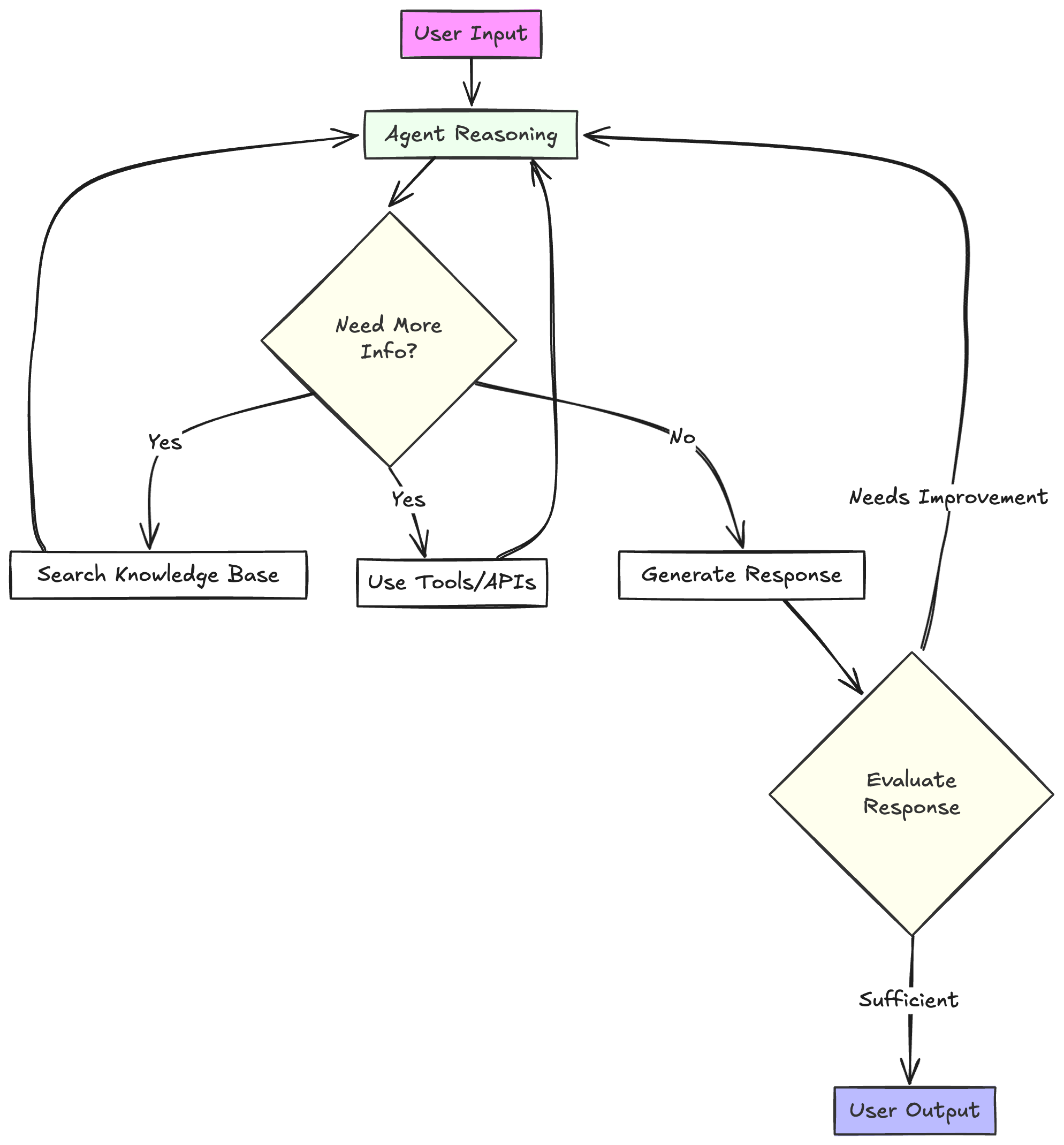

Agents, on the other hand, take a more dynamic and flexible approach. Instead of following a predetermined path, they make real-time decisions about how to retrieve and use information iteratively. This makes them non-deterministic, allowing them to adapt based on context and available resources. For instance, rather than simply classifying a user's prompt, an agent might explore multiple data sources, combine relevant information, and construct a tailored response. This makes agents powerful for complex tasks but also introduces challenges in predictability and control.
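A rough sketch of that loop, under stated assumptions: `fake_policy` stands in for the LLM deciding the next action, and `search_docs` and `search_tickets` are hypothetical data sources returning canned text. None of this reflects a specific framework's API; it only shows that the path is chosen at run time, step by step, rather than written in advance.

```python
from typing import Callable, Dict, List, Tuple


def search_docs(query: str) -> str:
    """Hypothetical data source: product documentation."""
    return "Docs: passwords are reset under Settings > Security."


def search_tickets(query: str) -> str:
    """Hypothetical data source: recent support tickets."""
    return "Tickets: a reset outage was resolved yesterday."


TOOLS: Dict[str, Callable[[str], str]] = {
    "search_docs": search_docs,
    "search_tickets": search_tickets,
}


def fake_policy(prompt: str, notes: List[str]) -> Tuple[str, str]:
    """Stand-in for the LLM: picks the next action from the prompt and notes so far."""
    if not notes:
        return ("search_docs", prompt)
    if "error" in prompt.lower() and len(notes) == 1:
        return ("search_tickets", prompt)
    return ("finish", " ".join(notes))


def run_agent(
    prompt: str,
    policy: Callable[[str, List[str]], Tuple[str, str]] = fake_policy,
    max_steps: int = 5,
) -> str:
    """Loop: ask the policy what to do, run that tool, feed the result back in."""
    notes: List[str] = []
    for _ in range(max_steps):
        action, argument = policy(prompt, notes)
        if action == "finish":
            return argument
        tool = TOOLS.get(action)
        if tool is None:
            notes.append(f"Unknown tool: {action}")
            continue
        notes.append(tool(argument))
    # Step budget exhausted: return whatever was gathered.
    return " ".join(notes)
```

Two prompts can take different routes through the same code: one finishes after a single lookup, another consults a second source. The `max_steps` cap is one simple way to bound the loop, since nothing else guarantees the policy will ever choose to finish.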

When to use Agents?

Using agents makes sense when even small time savings add up. Take expense report processing: an AI agent can scan receipts, categorize expenses, and generate a report, work that would take an employee several minutes per receipt. Across hundreds of reports, that automation significantly reduces manual effort. Agents also excel when flexibility and large-scale decision-making are crucial, since they can adapt dynamically instead of following rigid paths. However, this comes at a cost: agents give up some efficiency and add latency in exchange for better task performance, so they should only be used when that tradeoff is justified.
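One way to check whether the tradeoff is justified is a back-of-the-envelope calculation like the sketch below. All parameters (`manual_min`, `review_min`, `hourly_rate`, `agent_cost_per_receipt`) are hypothetical inputs you would need to measure yourself; no real figures are implied.

```python
def minutes_saved(receipts: int, manual_min: float, review_min: float) -> float:
    """Minutes saved if each receipt drops from manual_min to review_min of human time."""
    return receipts * (manual_min - review_min)


def net_benefit(
    receipts: int,
    manual_min: float,
    review_min: float,
    hourly_rate: float,
    agent_cost_per_receipt: float,
) -> float:
    """Value of the saved labor minus what the agent costs to run (same currency)."""
    labor_value = minutes_saved(receipts, manual_min, review_min) * hourly_rate / 60
    agent_cost = receipts * agent_cost_per_receipt
    return labor_value - agent_cost


# Hypothetical inputs: 500 receipts, 3 min manual vs 0.5 min review each.
saved = minutes_saved(500, manual_min=3, review_min=0.5)
```

A positive `net_benefit` only covers the running cost. It does not account for build and maintenance effort or the cost of errors, so treat it as a first filter rather than a verdict.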

When to NOT use Agents?

Agents are a poor choice when user inputs are too ambiguous, making it hard to verify their outputs, like automating a legal contract review where the nuances of language and intent require human expertise, or trying to generate personalized investment strategies where subjective judgment plays a critical role. In such cases, even minor misinterpretations can lead to costly errors. When a task requires strict reliability, workflows are the better option, because their predictability and consistency are what make that reliability achievable. For many applications, a well-optimized single LLM call with retrieval and in-context learning is often sufficient to achieve the desired outcome without unnecessary complexity.
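For reference, a single call with retrieval and in-context examples can be as small as the sketch below. The word-overlap `retrieve` function is a deliberately naive stand-in for a real retriever (embeddings, BM25, and so on), and `call_llm` is a hypothetical callable you would replace with your provider's client.

```python
from typing import Callable, List, Tuple


def retrieve(question: str, documents: List[str], k: int = 2) -> List[str]:
    """Naive retrieval: rank documents by how many words they share with the question."""
    q_words = set(question.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(q_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, documents: List[str], examples: List[Tuple[str, str]]) -> str:
    """Assemble retrieved context plus few-shot examples into one prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    shots = "\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return f"Context:\n{context}\n\nExamples:\n{shots}\n\nQ: {question}\nA:"


def answer(
    question: str,
    documents: List[str],
    examples: List[Tuple[str, str]],
    call_llm: Callable[[str], str],
) -> str:
    """One model call, no loop and no tool use. call_llm is a hypothetical client."""
    return call_llm(build_prompt(question, documents, examples))
```

There is exactly one model call here, so latency and cost are easy to reason about, and the prompt can be logged and inspected when an answer looks wrong.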

Final Thoughts

My hot take? 2025 will be the year we move from "agentic" to "multi-agent" systems, where multiple specialized AI agents collaborate to handle complex workflows efficiently. As exciting as this shift is, it's crucial to measure results at every stage to avoid unnecessary complexity and cost. The best approach is to start with simple LLM-powered automation, monitor its effectiveness, and only introduce agentic behavior when it provides clear, measurable benefits.